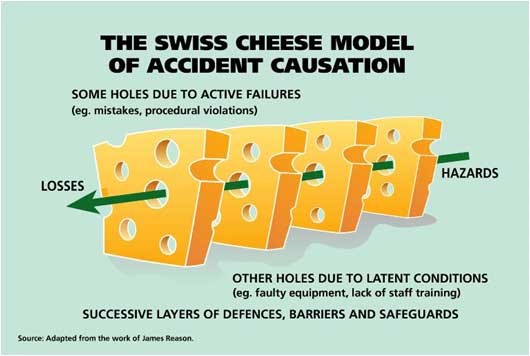

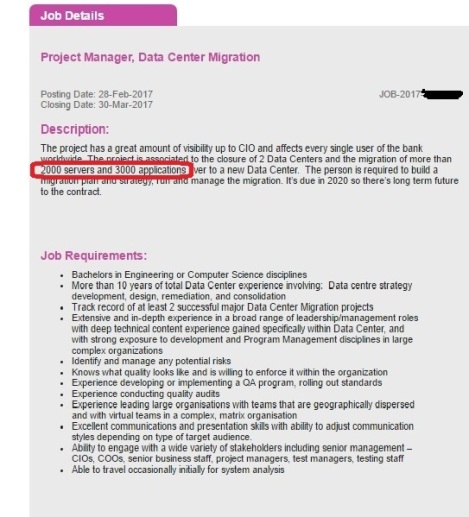

My colleagues and I have run into a range of issues on data center build projects in China and elsewhere. This post shares some of those experiences and the lessons learnt. To protect the people involved, some specifics (e.g. country or location) are obscured, without affecting the message.

Labor Strike

About halfway into the build programme for a data center in a city in the South Pacific, the electricians’ union called a strike because negotiations with the electrical installation companies over the four-year collective agreement had broken down on compensation. The strike ran for 10 full days and 7 half days; on the half days, the electricians worked until 11:30am and then walked off. Our project schedule had very little buffer. The client was kept apprised of the strike, but their legal counsel did not agree that it fell under the force majeure clause of our agreement. We managed to catch up by paying for some overtime days after the strike was over, and the client’s representatives delayed their take-over date by four precious days, so we avoided a penalty. The lesson: look out for collective agreements that are coming up for renewal and plan a bit more buffer around them.
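
To put a number on the buffer that was missing, the strike figures above can be turned into a rough count of working days lost. This is only a sketch: it assumes each half-day strike cost half a working day (the real fraction depends on shift length), and the names used are illustrative.

```python
# Figures taken from the strike described above.
FULL_STRIKE_DAYS = 10
HALF_STRIKE_DAYS = 7
TAKEOVER_DELAY_DAYS = 4  # extension granted by the client

# Assumption: a half-day strike costs half a working day.
HALF_DAY_FRACTION = 0.5


def strike_days_lost(full_days, half_days, half_fraction=HALF_DAY_FRACTION):
    """Return working days lost, counting each half day as a fraction."""
    return full_days + half_days * half_fraction


lost = strike_days_lost(FULL_STRIKE_DAYS, HALF_STRIKE_DAYS)
to_recover = lost - TAKEOVER_DELAY_DAYS

print(f"Electrician working days lost: {lost}")
print(f"Days left to recover through overtime: {to_recover}")
```

Under that assumption, roughly two working weeks of buffer would have been needed before any overtime or client goodwill came into play.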

Budget

Different countries have different practices when it comes to budget, in particular the buffer. In Singapore, we do not budget for a buffer; the usual arrangement is that if the overrun does not exceed 5% of the budget, it is covered from a central fund or some pre-arranged contingency account.

When we worked on a project in Australia, the design firm had to explain to us that the buffer of 10% is expected to be used and savings, if any, are a blessing.

In China, the budget is fixed and cannot be exceeded, and by the final round of budget review by senior management there is no buffer left. The other thing about the budget number is that all the data center equipment vendors and contractors will almost certainly learn of it, somehow.

While on the topic of budget, the budget for the data center design contract is roughly the same in Singapore and Australia, at around 3-5% of the whole project cost.

In China, the building regulations give a non-mandatory guideline of 3-5% for design contracts as well. However, competition for contracts is fierce, and the awarded design contract normally comes in under 3%, sometimes not even 1%. Quality and resource allocation by the design firm suffer as a consequence of this cut-throat pricing among data center mechanical and electrical design companies in China. For example, they will send a technical liaison on-site only once or twice during the build phase, just for project meetings. It is the norm in China that the main contractor takes over the drawings and is responsible for them thereafter; the design company endorses the drawings after the build programme is done, with very little work to verify or inspect. More on this in a future post.

Electricity Supply (last mile)

Securing the power supply for a data center facility is critical. For our Beijing project in an industrial park, it was secured through another company that acts purely as a go-between with the power company that supplies the industrial park. We were promised that the MV electrical cables would be laid and connected within six months. One month before the six months were up, the power company called a meeting and invited a third-party electrical cabling contractor to attend. We were told that the power company required this contractor to be engaged, through us or our middleman company, to finish the last mile of electrical cabling from the nearest power substation to our premises, and that we were to pay this new party an additional RMB 1 million. We were shocked. Escalating through various channels, negotiating to remove this new party, and so forth took another half a year before we relented and found the budget.

Worse, a new problem cropped up afterwards. The power company said that because we had delayed in contracting the new party, the cabling path had to change: the path previously planned to our premises was no longer available, as it had been given to a cable for a separate project. The outcome was that we and the owner of the adjacent building complex were to share an MV transformer room located on the ground floor of one of our neighbor’s buildings, with the MV cable running from their complex to ours. Again, it was a take-it-or-leave-it attitude from the power company. Our project ended up taking three years to complete. So China speed can be fast, but it can also be slow and end in a lousy compromise.

New Rules retrospectively applied to existing site

On the same Beijing project, we had another problem: new regulations imposed after the Beijing TV tower fire incident (2009) required two sources of water for firefighters to use. The regulations applied to all buildings being retrofitted. When we bought the former textile building complex and submitted our change-of-use plan, the industrial park management and the fire service bureau issued a directive that we were to comply with the new rules. The complex as it stood had only one water pipe, which was insufficient under the new regulations. Our options were to dig a well connected to a swimming-pool-sized water storage tank, or to have two swimming-pool-sized water storage tanks. We did the latter. Our green grounds were dug up and we put in two 30 m × 20 m × 4 m tanks with associated water pumps.
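
For a sense of scale, the tank dimensions above work out as follows. This is a rough sketch that assumes simple rectangular tanks filled to their full stated depth; the usable volume in practice would be somewhat lower.

```python
# Tank dimensions as stated above, in metres.
TANK_LENGTH_M = 30
TANK_WIDTH_M = 20
TANK_DEPTH_M = 4
TANK_COUNT = 2

LITRES_PER_CUBIC_METRE = 1000


def tank_volume_m3(length_m, width_m, depth_m):
    """Volume of a rectangular tank, assuming it is filled to full depth."""
    return length_m * width_m * depth_m


per_tank_m3 = tank_volume_m3(TANK_LENGTH_M, TANK_WIDTH_M, TANK_DEPTH_M)
total_m3 = per_tank_m3 * TANK_COUNT
total_litres = total_m3 * LITRES_PER_CUBIC_METRE

print(f"Per tank: {per_tank_m3} m3")
print(f"Both tanks: {total_m3} m3 ({total_litres:,} litres)")
```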

On-site inspection is a must

While in Shanghai, I inspected two green field sites on separate occasions. One was supposedly zoned and ready, and the draft drawings looked promising, until I visited the site after a two-hour drive. It was farm land, with old single-level residential buildings, village folk still living there, and no road built. The plans were just paper; nothing had been done yet. Five years later, the project has still not got off the paper.

In a tier-3 city in Anhui, we were to retrofit the ground and second floors of a 12-year-old factory building. (City tiers are a Chinese classification: tier 1 cities are the likes of Beijing, Tianjin, Shanghai and Shenzhen; tier 2 are usually the provincial capitals and other more developed cities.) It took 5 days to locate the paper as-built drawings from the state-owned conglomerate’s building management company. We were pondering how best to relocate the water sprinkler pipes on the ground floor so that the no-water time would be as short as possible. When the building management office folks came by to look at the sprinkler pipe, they commented that it was dry. I thought the system was a pre-action dry-sprinkler system, but was puzzled, as a factory building does not require such a complicated system. It turned out that I was mistaken. The sprinkler system was supposed to be charged with water, but the pipe had leaks, some pipes or valves were broken, and it had been dry for quite some years.

When the fire service bureau representative came by to review our drawing plans for the retrofitted floor, he hardly inspected the relocated water sprinkler system. I reminded myself to always look for the nearest exit every day I went to that building.

On a data center site inspection in Guangdong province, I came across a case where the original documentation endorsed by the design company showed dual incoming MV electricity supplies, while in fact the building had only one.

Defect

Each country handles the defect list differently. In China, the defect list is sometimes overlooked if the main contractor’s boss and the project owner’s big boss are friends, and that friendship was the reason for awarding the contract to that main contractor in the first place. When the relationship turns sour for whatever reason, the defect list grows and grows and is used as a reason for not paying the remaining sums of the project. It is not good to mix business and relationships.

Protest

A few days before the Chinese New Year holidays, a main contractor asked its sub-contractor to hire some workers to stand in front of the gate of a Shanghai data center company and block incoming cars and worker transport buses. The police were called and the protesters were moved to the side of the entrance. After two days, the organized protest stopped, as the police had warned the sub-contractor of serious consequences. Rumor has it that the sub-contractor’s manager and his colleagues responsible for organizing the protesters were “dealt” with.

Impulsive Decision and Big Grand Upscale (高大上)

Impulsive decision making (拍脑袋做决策) by key decision makers, without expert advice, is a major problem in Chinese organizations. Many cloud data center projects have been announced throughout China, but only a fraction have actually been completed and put into operation. Most of those have not reached 50% occupancy and are loss-making. One organization I know set a cloud strategy in 2015 to have capacity for 30,000 racks within 3 years, without regard to market demand. Worse, the cities where it planned to build its large-scale cloud data centers (6,000-10,000 racks each) are less developed cities that do not need such scale, and the plan took no account of competitors, who are not sitting still. The leaders want big, grand projects; the Chinese saying is 高大上.
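
The scale of that plan is easier to see as a back-of-envelope calculation. The sketch below uses only the figures quoted above and assumes, purely for illustration, that the rollout is spread evenly over the three years.

```python
import math

# Figures from the 2015 strategy described above.
TARGET_RACKS = 30_000
MIN_SITE_RACKS = 6_000
MAX_SITE_RACKS = 10_000
YEARS = 3

# Number of large sites needed at the top and bottom of the per-site range.
fewest_sites = math.ceil(TARGET_RACKS / MAX_SITE_RACKS)
most_sites = math.ceil(TARGET_RACKS / MIN_SITE_RACKS)

# Assumption: capacity is added evenly each year.
racks_per_year = TARGET_RACKS // YEARS

print(f"Large sites needed: {fewest_sites} to {most_sites}")
print(f"Racks to bring online per year: {racks_per_year}")
```

In other words, the plan implied three to five very large facilities, and the equivalent of one of the largest coming online every year, in cities without matching demand.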

Finding the Gems among all the “promising” projects

In China and elsewhere in Asia, it is a challenge to sift through all the plans announced by local governments, cloud players, and developers of large data center parks to find the gems, the worthy projects. There are data center projects that are more sure-footed, and data center service providers that are on firmer ground and growing from strength to strength.

Inspur has built a data center facility with a capacity of 8,000 racks in Chongqing for China Unicom under a build-operate model (reference 3). Inspur has also announced plans to build seven large data center facilities and 50 smaller ones throughout China (reference 3, 4).
